Most AI pilots stall before production. Hugging Face helps you ship instead of babysitting a science project.

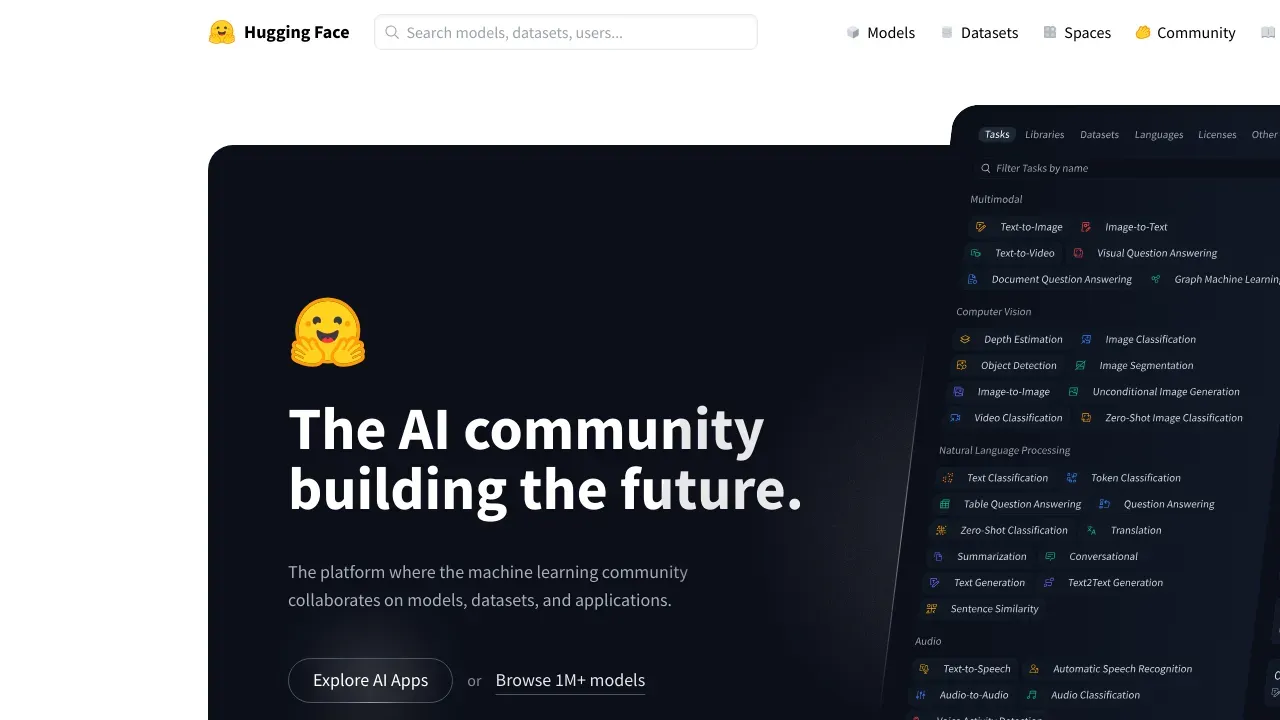

Hugging Face is a model hub and MLOps stack for teams that want real outcomes. Browse thousands of LLMs, vision models, and audio models. Fine-tune with Transformers, Diffusers, and PEFT, then push to production with Inference Endpoints that autoscale on the cloud provider you choose. No mystery DevOps ritual required.

Spaces lets you spin up GPU-backed Gradio or Streamlit apps in minutes. Demo, collect feedback, and iterate fast. When it works, click deploy. When it flops, you learn cheaply. That is product velocity.
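To make the Spaces workflow concrete, here is a minimal sketch of the kind of `app.py` a Gradio Space might contain. The function `preview_feedback` and the title text are hypothetical stand-ins for a real model call, not part of any Hugging Face API. Gradio is imported lazily, so the helper can be exercised without it installed.

```python
def preview_feedback(text: str) -> str:
    """Hypothetical stand-in for a model call: normalize whitespace and cap length."""
    cleaned = " ".join(text.split())
    return cleaned[:200] if cleaned else "Please enter some text."


def build_demo():
    """Wrap the helper in a simple text-in, text-out Gradio interface."""
    # Imported here so the helper above works even where Gradio is not installed.
    import gradio as gr

    return gr.Interface(
        fn=preview_feedback,
        inputs="text",
        outputs="text",
        title="Feedback demo (illustrative)",
    )


# In a Space's app.py you would finish with:
#     build_demo().launch()
```

Swapping `preview_feedback` for a real inference call is the only change needed to turn the sketch into a working demo.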

For business, the platform brings enterprise security, SSO, audit logs, private repositories, and role controls. Keep datasets private, run inside a VPC, and stay compliant while you experiment.

You get an open ecosystem, not lock-in. Use Python, PyTorch, TensorFlow, and ONNX, and integrate with LangChain, Weights & Biases, and GitHub. Scale from a scrappy proof of concept to global inference without rebuilding your stack.

Perfect for online businesses that need LLM deployment, RAG chatbots, image moderation, or product tagging right now. Pick a model, fine-tune it, A/B test in a Space, then serve with Inference Endpoints. Faster conversions, fewer tickets, lower CAC. Less AI theater, more revenue.
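The last step of that workflow, serving through an Inference Endpoint, usually comes down to an authenticated HTTP POST with a JSON body. The sketch below uses only the Python standard library. The endpoint URL and token are placeholders you would replace with your own, and the simple `{"inputs": ...}` payload is an assumption that fits many text tasks but not every model.

```python
import json
import urllib.request


def build_endpoint_request(endpoint_url: str, token: str, text: str) -> urllib.request.Request:
    """Build (but do not send) a POST request for a deployed endpoint."""
    payload = json.dumps({"inputs": text}).encode("utf-8")
    return urllib.request.Request(
        endpoint_url,
        data=payload,
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def query_endpoint(request: urllib.request.Request, timeout: float = 30.0):
    """Send the request and decode the JSON response (needs network access)."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


# Placeholder values for illustration only; substitute your endpoint URL and token.
example = build_endpoint_request("https://example.invalid/my-endpoint", "hf_placeholder", "Where is my order?")
```

Keeping request construction separate from sending makes it easy to unit test the payload without touching the network.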


Best features:

- Model Hub across NLP, vision, audio, and multimodal so you start from proven baselines and ship faster

- Inference Endpoints with autoscaling and private networking to deploy LLMs to production reliably

- Spaces with GPU-backed Gradio or Streamlit apps to demo, iterate, and collect user feedback quickly

- Transformers, Diffusers, PEFT, and Tokenizers to fine-tune and optimize models with less compute

- Dataset hosting and evaluations to version data and measure quality with repeatable MLOps

- Enterprise controls like SSO, audit logs, and role permissions to meet security and compliance

Ship real AI in days, not quarters, with an open stack your team actually understands.

Use cases:

- Launch a RAG customer support chatbot using an open LLM, deployed via Inference Endpoints

- Auto-tag product images with a computer vision model to improve search and merchandising

- Generate on-brand ad copy and emails by fine-tuning a small language model for your niche

- Moderate UGC with text and image safety models to reduce manual review costs

- Spin up a sales demo Space to showcase an AI feature and capture leads in hours

- Extract entities from invoices and tickets to replace brittle regex workflows
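As an illustration of the entity-extraction use case above, the following sketch groups the output of a Transformers token-classification pipeline by entity label. The helper names `group_entities` and `extract_entities` and the `min_score` threshold are hypothetical choices for this example. The pipeline call assumes the `transformers` library is installed and a model can be downloaded. When no model name is given, the library picks its default model for the task, which may not suit invoices without fine-tuning.

```python
from collections import defaultdict


def group_entities(predictions, min_score=0.5):
    """Group aggregated NER predictions by label, dropping low-confidence hits."""
    grouped = defaultdict(list)
    for item in predictions:
        if item["score"] >= min_score:
            grouped[item["entity_group"]].append(item["word"])
    return dict(grouped)


def extract_entities(text, model_name=None):
    """Run a token-classification pipeline and group its results (downloads a model)."""
    # Imported lazily so group_entities can be used and tested without transformers.
    from transformers import pipeline

    ner = pipeline(
        "token-classification",
        model=model_name,  # None lets the library choose its default model
        aggregation_strategy="simple",  # merge word pieces into whole entities
    )
    return group_entities(ner(text))
```

Because `group_entities` works on plain dictionaries, the filtering threshold can be tuned on recorded predictions before any model runs in production.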

Suited for:

Founders, growth teams, and lean engineering orgs who need working AI in production, not endless prototyping. Ideal when budget is tight, timelines are shorter than your coffee cooldown, and you want open-source speed without vendor lock-in.

Integrations:

- AWS SageMaker, Azure ML, Google Cloud Vertex AI, GitHub, Google Colab, Weights & Biases, LangChain, PyTorch, TensorFlow, ONNX, Docker, Kubernetes